LANCAR: Leveraging Language for Context-Aware Robot Locomotion in Unstructured Environments

Abstract

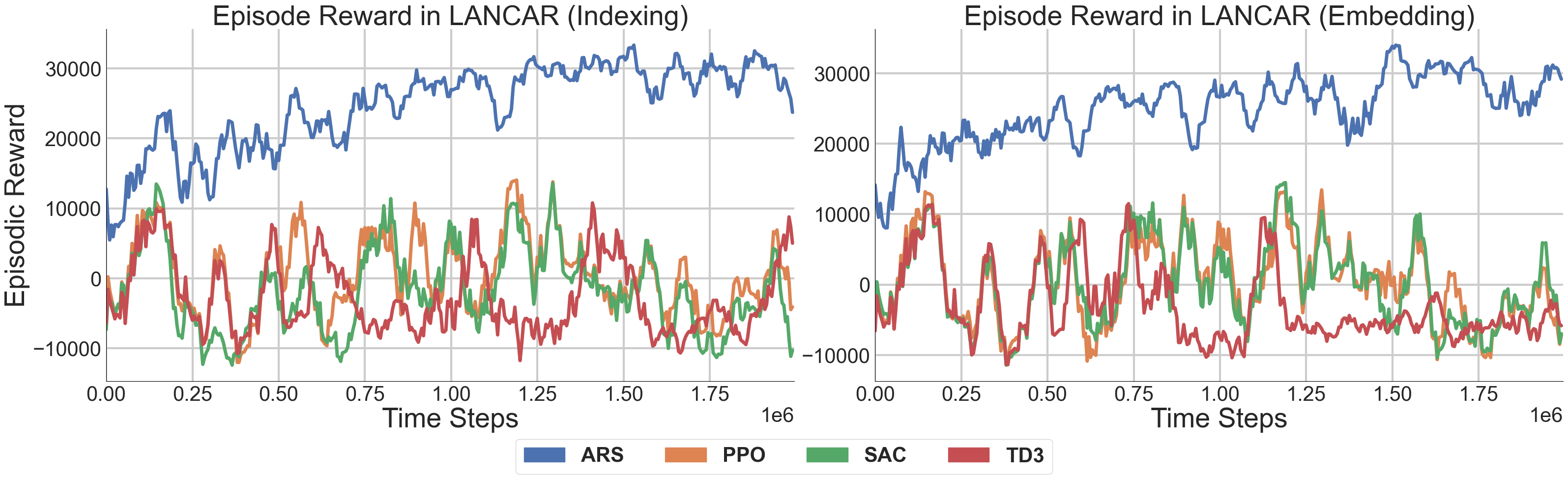

Navigating robots through unstructured terrains is challenging, primarily because the environment changes dynamically. While humans adeptly navigate such terrains by using context from their observations, creating a similar context-aware navigation system for robots is difficult. The essence of the issue lies in the acquisition and interpretation of contextual information, a task complicated by the inherent ambiguity of human language. In this work, we introduce LANCAR, which addresses this issue by combining a context translator with reinforcement learning (RL) agents for context-aware locomotion. LANCAR uses Large Language Models (LLMs) to let robots comprehend contextual information provided by human observers and to convert this information into actionable contextual embeddings. These embeddings, combined with the robot's sensor data, provide a complete input for the RL agent's policy network. We provide an extensive evaluation of LANCAR under different levels of contextual ambiguity and compare it with alternative methods. The experimental results showcase its superior generalizability and adaptability across different terrains. Notably, LANCAR shows at least a 7.4% increase in episodic reward over the best alternatives, highlighting its potential to enhance robotic navigation in unstructured environments.

Method

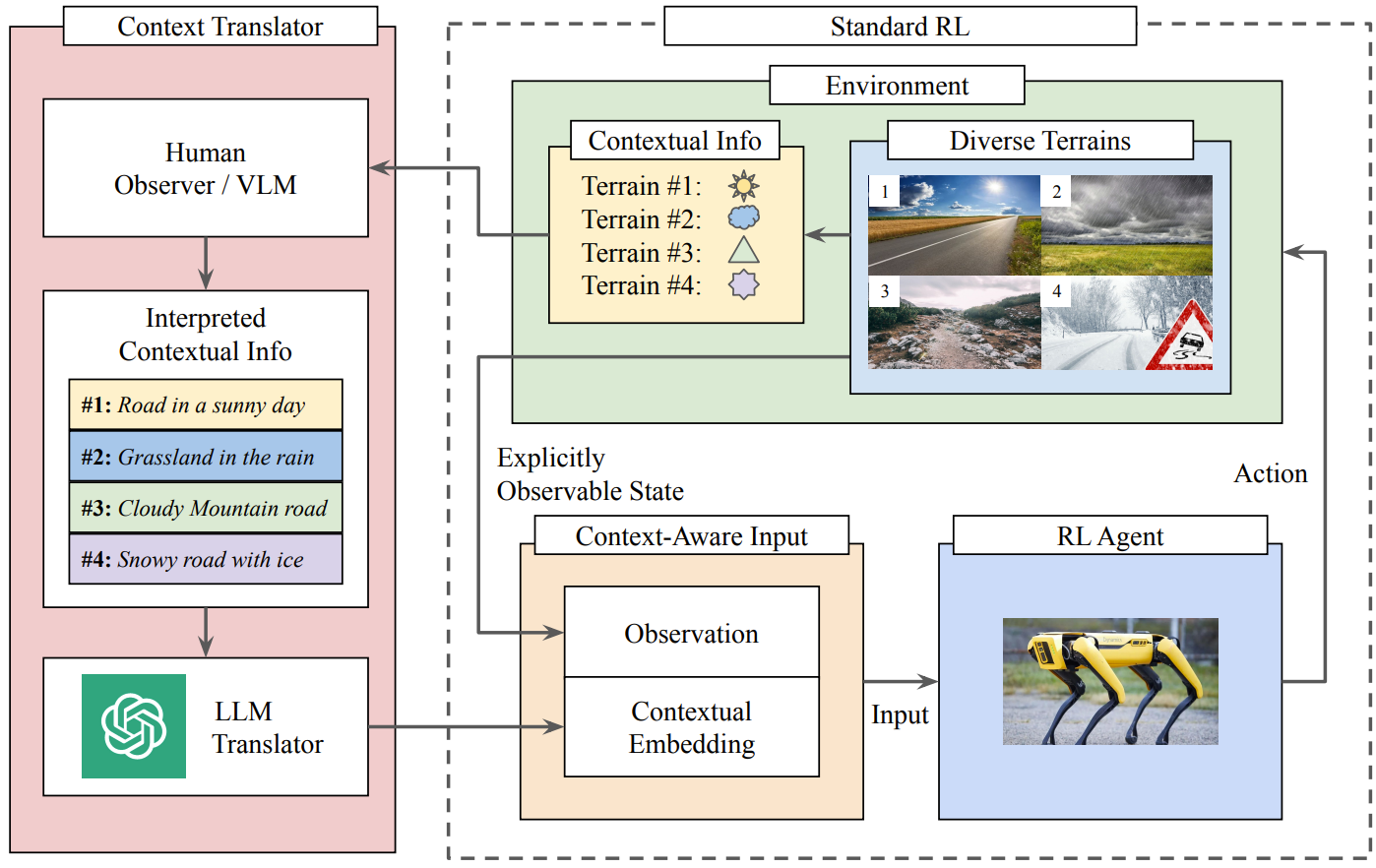

Context-Aware Reinforcement Learning Robot Locomotion. Our framework adds a context translator to the standard RL framework. In an environment with diverse terrains, the agent receives the explicitly observable state as its observation, while the human observer (or VLM) perceives the context information as the implicitly observable state. The human observer (or VLM) interprets the contextual information and passes it to the LLM translator. The LLM translator extracts the environmental properties from the contextual information and generates the contextual embedding, which is concatenated with the observations to form the input for the RL agents. The RL agents produce actions from their control policies given these context-aware inputs and execute them in the environment.
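The concatenation step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `build_policy_input` and `linear_policy`, the two-value embedding, the placeholder sensor readings, and the toy linear policy are all assumptions made for illustration.

```python
import math

def build_policy_input(observation, context_embedding):
    """Concatenate the robot's observation with the contextual embedding."""
    return list(observation) + list(context_embedding)

def linear_policy(weights, policy_input):
    """Toy linear policy (an assumption): one tanh-squashed output per weight row."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, policy_input)))
        for row in weights
    ]

# Placeholder sensor readings (hypothetical values).
observation = [0.0, 0.1, -0.2, 0.3]
# Hypothetical embedding, e.g. values standing in for friction and elasticity.
context_embedding = [0.2, 0.9]

policy_input = build_policy_input(observation, context_embedding)

# Three action dimensions, zero-initialised weights (hypothetical sizes).
weights = [[0.0] * len(policy_input) for _ in range(3)]
action = linear_policy(weights, policy_input)
```

The policy only ever sees one flat vector, so the same RL backbone can be trained with or without context by changing the embedding that is appended.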

Simulation

[Simulation figure. Terrain: Low Friction High Elastic Ground. Panel labels: No-Context, LANCAR (Indexing), LANCAR (Embeddings); SAC, TD3, PPO.]

Results

We evaluate all baselines and ablations over 10 cases (5 low-level context cases and 5 high-level context cases). Approaches based on Augmented Random Search (ARS) achieve much higher episodic rewards than all other baselines. ARS using LANCAR embeddings for contextual information performs better than all other approaches in most cases.

Total rewards in 10^3 (5000 steps)

| Method | Backbone | A | B | C | D | E | F | G | H | I | J |
|---|---|---|---|---|---|---|---|---|---|---|---|
| No-Context | ARS | 36.628 | 19.698 | 38.000 | 28.573 | 30.744 | 35.545 | 13.051 | 29.819 | 34.053 | 33.934 |
| No-Context | SAC | 24.189 | -10.128 | 15.5710 | -10.839 | -11.457 | 9.461 | -7.123 | -10.076 | 18.252 | -3.994 |
| No-Context | TD3 | 25.001 | -6.756 | 17.768 | -12.230 | -11.726 | 9.833 | -9.445 | -12.450 | 19.352 | -3.583 |
| No-Context | PPO | 7.542 | -8.266 | -1.249 | -10.159 | -10.073 | 4.534 | -7.262 | -10.637 | 15.798 | -2.181 |
| LANCAR (Indexing) | ARS | 36.659 | 23.435 | 38.366 | 20.649 | 22.952 | 37.791 | 16.265 | 22.776 | 36.676 | 35.257 |
| LANCAR (Indexing) | SAC | 16.423 | -9.695 | 14.534 | -12.199 | -12.443 | 7.521 | -7.592 | -12.012 | 16.252 | -5.815 |
| LANCAR (Indexing) | TD3 | 20.867 | -7.665 | 15.734 | -11.672 | -11.612 | 7.955 | -7.328 | -13.414 | 17.089 | -4.131 |
| LANCAR (Indexing) | PPO | 24.119 | -8.343 | 11.851 | -8.520 | -9.498 | 10.937 | -10.980 | -10.333 | 19.934 | -2.009 |
| LANCAR (Embeddings) | ARS | 41.220 | 20.706 | 41.725 | 29.545 | 31.595 | 40.563 | 12.162 | 30.961 | 39.722 | 36.623 |
| LANCAR (Embeddings) | SAC | 12.154 | -8.648 | 17.251 | -9.413 | -11.159 | 8.381 | -7.197 | -12.599 | 16.176 | -5.970 |
| LANCAR (Embeddings) | TD3 | 20.714 | -8.655 | 17.788 | -9.138 | -11.022 | 8.587 | -6.465 | -12.478 | 15.772 | -6.800 |
| LANCAR (Embeddings) | PPO | 12.979 | -9.449 | 5.512 | -9.187 | -10.314 | 8.345 | -9.533 | -9.391 | 15.607 | -8.148 |
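
As a quick check on the "most cases" statement, the sketch below counts the cases in which each ARS variant has the highest total reward. It assumes that the three ARS rows of the table belong, in order, to No-Context, LANCAR (Indexing), and LANCAR (Embeddings); the names `ars_rewards` and `count_wins` are illustrative only.

```python
CASES = list("ABCDEFGHIJ")

# ARS rows copied from the table (total rewards in 10^3, 5000 steps).
# Assumption: the three ARS rows map to these three methods in table order.
ars_rewards = {
    "No-Context": [36.628, 19.698, 38.000, 28.573, 30.744,
                   35.545, 13.051, 29.819, 34.053, 33.934],
    "LANCAR (Indexing)": [36.659, 23.435, 38.366, 20.649, 22.952,
                          37.791, 16.265, 22.776, 36.676, 35.257],
    "LANCAR (Embeddings)": [41.220, 20.706, 41.725, 29.545, 31.595,
                            40.563, 12.162, 30.961, 39.722, 36.623],
}

def count_wins(rewards, method):
    """Return the cases in which `method` has the highest reward."""
    wins = []
    for i, case in enumerate(CASES):
        best = max(rewards, key=lambda name: rewards[name][i])
        if best == method:
            wins.append(case)
    return wins

embedding_wins = count_wins(ars_rewards, "LANCAR (Embeddings)")
indexing_wins = count_wins(ars_rewards, "LANCAR (Indexing)")
print(len(embedding_wins), "of", len(CASES), "cases:", embedding_wins)
```

Under that row assignment, ARS with LANCAR embeddings leads in 8 of the 10 cases, and ARS with indexing leads in the remaining two (B and G).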

Related Works

Learning to Prompt for Vision-Language Models. Kaiyang Zhou, Jingkang Yang, Chen Change Loy, Ziwei Liu. IJCV 2022.

Vision-and-Language Navigation: Interpreting visually-grounded navigation instructions in real environments. Peter Anderson, Qi Wu, Damien Teney, Jake Bruce, Mark Johnson, Niko Sünderhauf, Ian Reid, Stephen Gould, Anton van den Hengel. CVPR 2018.

RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control. Anthony Brohan, Noah Brown, Justice Carbajal, Yevgen Chebotar, Xi Chen, Krzysztof Choromanski, Tianli Ding, Danny Driess, Avinava Dubey, Chelsea Finn, Pete Florence, Chuyuan Fu, Montse Gonzalez Arenas, Keerthana Gopalakrishnan, Kehang Han, Karol Hausman, Alexander Herzog, Jasmine Hsu, Brian Ichter, Alex Irpan, Nikhil Joshi, Ryan Julian, Dmitry Kalashnikov, Yuheng Kuang, Isabel Leal, Lisa Lee, Tsang-Wei Edward Lee, Sergey Levine, Yao Lu, Henryk Michalewski, Igor Mordatch, Karl Pertsch, Kanishka Rao, Krista Reymann, Michael Ryoo, Grecia Salazar, Pannag Sanketi, Pierre Sermanet, Jaspiar Singh, Anikait Singh, Radu Soricut, Huong Tran, Vincent Vanhoucke, Quan Vuong, Ayzaan Wahid, Stefan Welker, Paul Wohlhart, Jialin Wu, Fei Xia, Ted Xiao, Peng Xu, Sichun Xu, Tianhe Yu, Brianna Zitkovich. 2023.