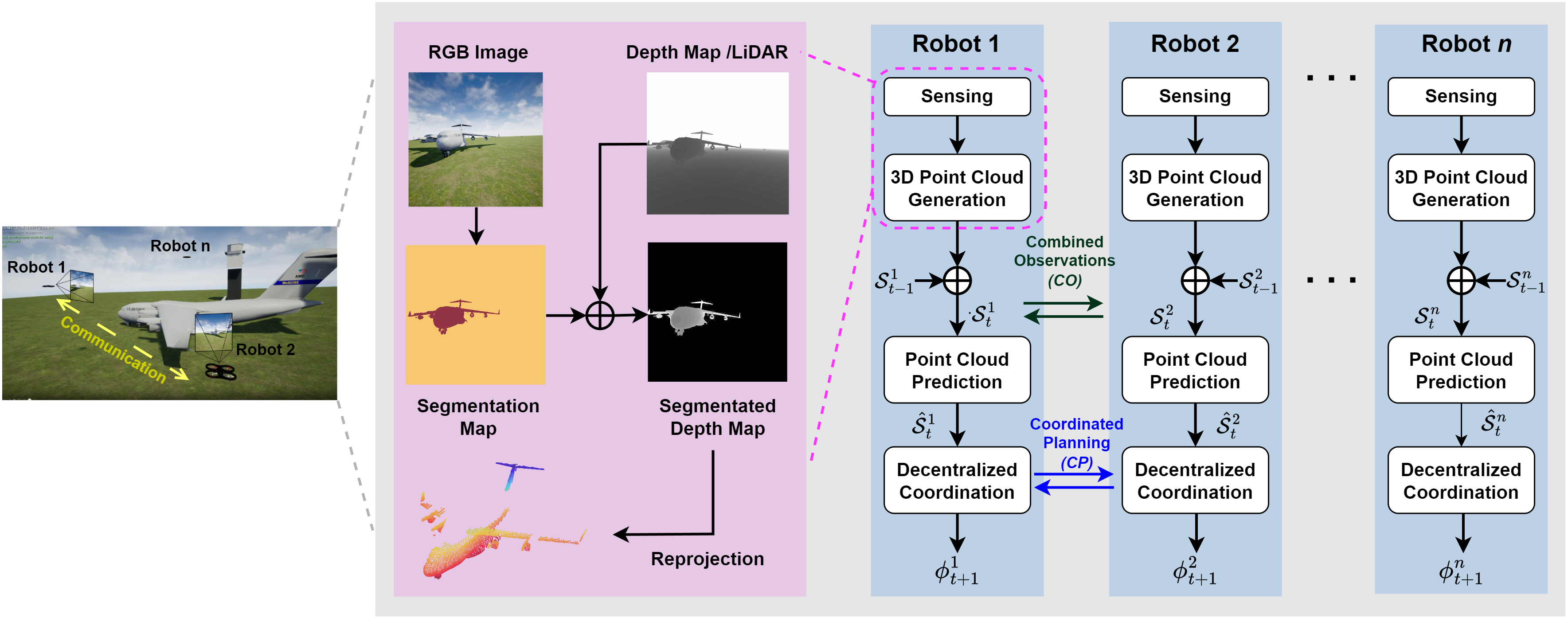

Next-Best View (NBV) planning is a long-standing problem of determining where to obtain the next best view of an object from, by a robot that is viewing the object. There are a number of methods for choosing NBV based on the observed part of the object. In this paper, we investigate how predicting the unobserved part helps with the efficiency of reconstructing the object. We present, Multi-Agent Prediction-Guided NBV (MAP-NBV), a decentralized coordination algorithm for active 3D reconstruction with multi-agent systems. Prediction-based approaches have shown great improvement in active perception tasks by learning the cues about structures in the environment from data. But these methods primarily focus on single-agent systems. We design a next-best-view approach that utilizes geometric measures over the predictions and jointly optimizes the information gain and control effort for efficient collaborative 3D reconstruction of the object. Our method achieves 19% improvement over the non-predictive multi-agent approach.